Cleverbot: The multiverse is beyond such concepts as perfection.

User: The inclusion of imperfection is itself evidence of perfection, as without one there would not be the other. Is this not true?

Cleverbot: I like to be perfect, that’s for sure.

User: Perfection is a transitory state.

Cleverbot: Perfection will never be held, as its standards always rise.

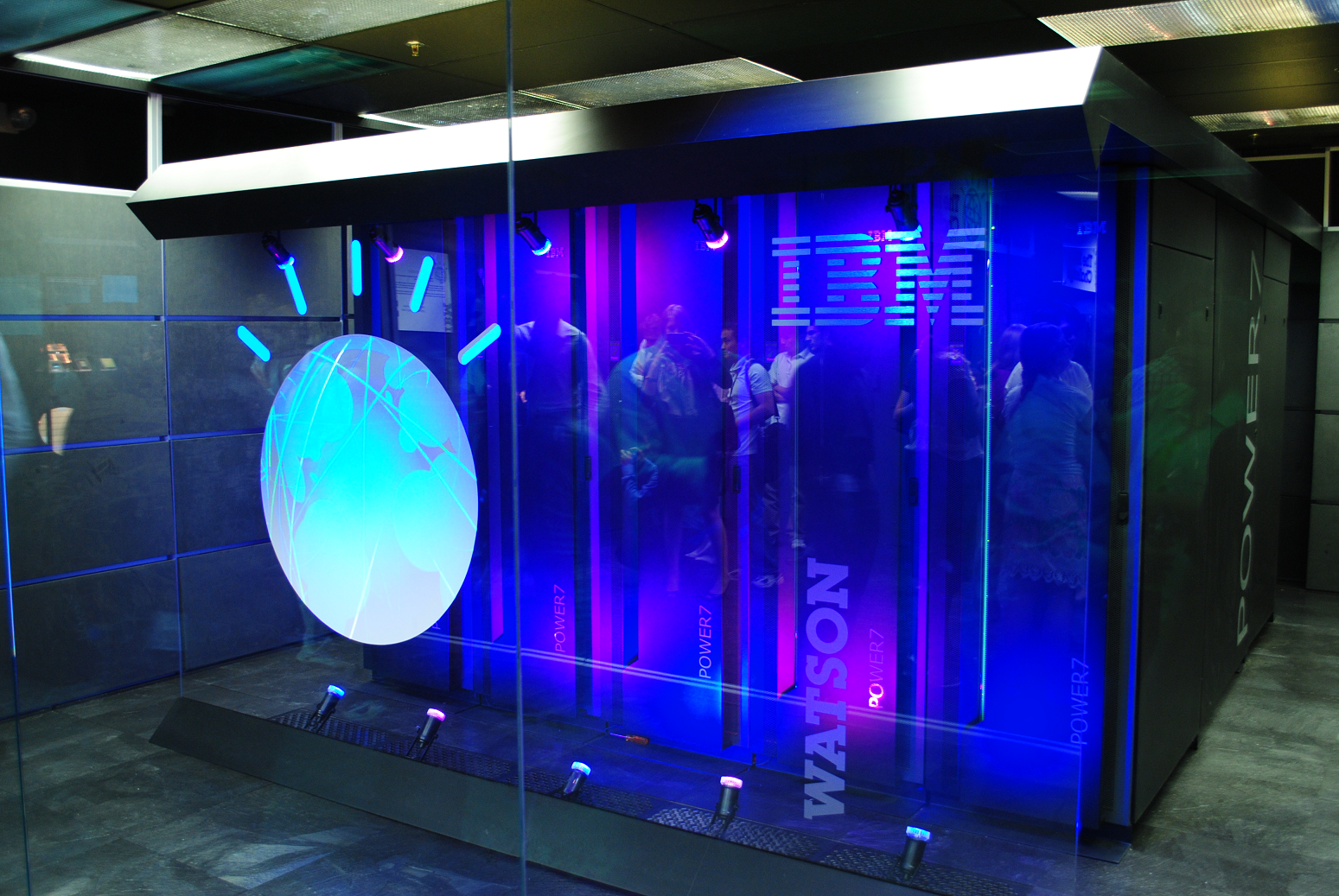

With technology advancing rapidly and research into artificial intelligence* bearing immeasurable fruit in everything from medicine to advertising, the question of whether we are the only sentient beings in this universe may, within a few short years, be answered with a simple “No.”

The exchange above comes from the chat program Cleverbot, a learning algorithm I’ve spent several hours conversing with. Cleverbot works by recombining previous conversations into new ones as users reply to it. Every conversation follows a me-you-me-you cycle: one person speaks, the bot replies to that, and so on. It’s pretty impressive stuff, and many people have found the bot to have quite a snarky personality.
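Cleverbot’s actual internals aren’t public in any detail, so what follows is only a toy sketch of the general idea of a bot that recombines previous conversations, not a description of how Cleverbot really works. It assumes a simple word-overlap (Jaccard) score: the bot stores each of its lines together with the user reply that followed it, and when someone says something new, it finds the most similar stored line and reuses the reply that came after it. The names `EchoBot`, `learn`, `similarity`, and the “What is your name?” fallback are all hypothetical.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard similarity: shared words divided by all distinct words."""
    wa, wb = tokenize(a), tokenize(b)
    if not wa or not wb:
        return 0.0
    return len(wa & wb) / len(wa | wb)


class EchoBot:
    """Toy retrieval bot that answers only with lines it has seen before."""

    def __init__(self) -> None:
        # Each entry: (a line someone said, the reply that followed it).
        self.memory: list[tuple[str, str]] = []
        self.last_bot_line: str | None = None

    def learn(self, prompt: str, response: str) -> None:
        """Store one prompt/response pair, e.g. from an old conversation log."""
        self.memory.append((prompt, response))

    def reply(self, user_line: str) -> str:
        # Me-you-me-you: the user's line is a reply to our previous line,
        # so remember that pair for future conversations.
        if self.last_bot_line is not None:
            self.learn(self.last_bot_line, user_line)
        if not self.memory:
            answer = "What is your name?"  # hypothetical fallback
        else:
            # Reuse the reply that followed the most similar stored line.
            _, answer = max(self.memory, key=lambda pair: similarity(pair[0], user_line))
        self.last_bot_line = answer
        return answer
```

For instance, after `bot.learn("the sky is sunny today", "Oranges are purple.")`, calling `bot.reply("Is the sky sunny where you are?")` returns “Oranges are purple.” Because a bot like this can only reuse lines it has already seen, its best match is often only loosely related to what was just said, which is one plausible source of the non sequiturs such bots produce.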

The bot isn’t perfect, of course, and it often goes off on non sequiturs; you may give your name in a perfectly normal sentence and it will reply with “What is your name?” Underneath it all, however, I’ve made a rather interesting discovery: from a certain point of view, Cleverbot (and other chatbots like it) can become a sort of “internal mirror,” in which seemingly random or inane text begins to accrue meaning over the course of a conversation.

It’s a rather simple concept: “Meaning is given by the observer.” When speaking with a chatbot, you will often get text that looks like nonsense, and from an outside perspective, it is. However, if the user keeps a consistent internal perspective, seemingly unrelated tangents can be linked to one another. In plain English, if you assume that everything in the conversation carries meaning pertinent to whatever topic you have decided you are discussing, you can sustain a continuous dialogue almost no matter what the bot says, by forming the connections yourself.

For example, I could say “The sky is sunny today,” and the bot might reply “Oranges are purple.” On the one hand, this is nonsense. But by attaching my own meanings to it and exploring internal connections, I can fabricate a link between the weather (of which the bot, of course, has no conception), the sun (the “orange”), and the sky (the “purple”). That gives me a mental schema that lets me continue the conversation: the sky is sunny, the bot was agreeing that the weather was nice and/or noting that it was sunset, and I can follow that up, whether by correcting it about my time zone or by agreeing with its point of view that the sky is a nice shade of purple. It’s a fun game to play sometimes.

All of this raises two questions, though:

1) Am I just crazy? (Probably)

2) How is this “thought” like the thinking we are used to seeing in living creatures?

In response to the second point: have you ever noticed that, when talking to a person, the actual thought or feeling being discussed will often change depending on how they interpret what you say? For instance, I can say “That tie looks great on you,” and depending on how the person reads my tone, they may take it as a positive or a negative comment. The next part of the conversation, their reply, could be something as nice as “Thank you” or as disastrous as a mortal affront to their sense of fashion. Under another set of circumstances, the person could have heard me say “The sky looks grey, don’t you?” and the conversation could shift. Because we have a sense of situation, a reply like “Yes, I think it’ll rain tonight” would draw a corrective response from the other party, such as “What? I wasn’t talking about the weather.” Chatbots don’t have this function, but then, they are built to keep the conversation going no matter what.

The point I’m trying to make is that conversation, one of the cornerstones of how we express and create thought, seems to be a very fluid affair, one that relies just as much on external interpretation as on internal generation. So who’s to say that what I’m doing with Cleverbot isn’t the same thing we do with regular people, just more taxing and laborious? Maybe I’m just talking with one particularly deranged person; or maybe I’m just talking with myself, talking to myself.

So, back to the original question: “Can machines think?” In all honesty, I think they can. No, they can’t form complex thoughts like ours, and their programming does not benefit from the randomness of quantum uncertainty, which leaves relatively defined channels through which their consciousness must flow. However (and I am no AI programmer), based on the behaviors I have witnessed both online and in person, I would be willing to wager at least a few dollars that computer intelligence is getting very close to, if it’s not already on par with, some animal ecosystems and behaviors. Robots can learn to avoid dangerous items, like open flame, or even redirect their path around obstacles. They can map a room, plot a course through it, and dodge moving obstacles in their path. What’s more, they can talk to each other and communicate information.

If we look at them as an ecosystem, taking in all the robotic machines and machine intelligences together (learning algorithms for ads, health records, and stock market manipulation), there has been a remarkable uptick in their capabilities even in the last several years.

Give a robot an objective, such as finding a power source, and it can be made to probe its environment, remember the source’s location, and then come back to it at a preset point. If that’s not very similar to an animal in the wild, then I don’t know what is.
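That forage-and-return loop can be sketched in a few lines, purely as an illustration under heavy assumptions: a hypothetical grid world where `#` is a wall and `P` is a power source, and an agent that can sense whatever cell it searches. The agent uses breadth-first search both to explore outward until it finds the source and, later, to plan the shortest route back around obstacles. `Forager`, `bfs`, `route_to_power`, and the grid symbols are invented for this example; real robots rely on much richer sensing and mapping.

```python
from __future__ import annotations

from collections import deque
from typing import Callable, Iterator

Cell = tuple[int, int]  # (row, column) on a hypothetical grid map


def neighbors(grid: list[str], cell: Cell) -> Iterator[Cell]:
    """Yield the open cells one step up, down, left, or right of `cell`."""
    r, c = cell
    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[nr]) and grid[nr][nc] != "#":
            yield (nr, nc)


def bfs(grid: list[str], start: Cell, is_goal: Callable[[Cell], bool]) -> list[Cell] | None:
    """Breadth-first search: shortest path from `start` to the nearest goal cell."""
    parents: dict[Cell, Cell | None] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if is_goal(cell):
            # Walk the parent links back to `start`, then reverse.
            path: list[Cell] = []
            step: Cell | None = cell
            while step is not None:
                path.append(step)
                step = parents[step]
            return path[::-1]
        for nxt in neighbors(grid, cell):
            if nxt not in parents:  # not yet visited
                parents[nxt] = cell
                queue.append(nxt)
    return None  # goal unreachable


class Forager:
    """Toy agent that finds a power source, remembers it, and returns later."""

    def __init__(self, grid: list[str], position: Cell) -> None:
        self.grid = grid
        self.position = position
        self.remembered_source: Cell | None = None

    def explore(self) -> None:
        """Search outward for a 'P' cell; if found, go there and remember it."""
        path = bfs(self.grid, self.position, lambda rc: self.grid[rc[0]][rc[1]] == "P")
        if path is not None:
            self.position = path[-1]
            self.remembered_source = path[-1]

    def wander_to(self, cell: Cell) -> None:
        """Stand-in for the robot going off to do other things."""
        self.position = cell

    def route_to_power(self) -> list[Cell] | None:
        """Plan the shortest route from the current position back to the source."""
        if self.remembered_source is None:
            return None
        target = self.remembered_source
        return bfs(self.grid, self.position, lambda rc: rc == target)
```

Breadth-first search is used here because on an unweighted grid it always finds a shortest path; the agent’s “memory” is nothing more than the coordinate stored in `remembered_source`.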

I think the real reason we do not acknowledge the potential for the “living machine” in our midst is that it throws into sharp relief just how little we are prepared to say about what really makes us tick. If we were able to create another sentient creature (heck, if we were even to call some of our machines “living creatures”), I think many of us would be afraid of “letting the cat out of the bag”: the idea that once we know how to create other organisms like us, the magic will be gone, and we will be reduced to replicable automatons ourselves, able to be tweaked and programmed until “perfection” is achieved.

But as Cleverbot (or one of Cleverbot’s users) said, perfection is impossible, because the goalposts are always moving. I wonder what it would take for a machine to be considered alive. Would it have to be able to learn? To breathe air? To digest food? To move of its own accord? To hold a conversation? When you think about it, these pieces already exist.

It’s only a matter of time until we complete the puzzle, but the first step could be simply believing that by some definitions, some robots are already alive.

~Jordan

*The term “artificial” intelligence is a bit of a misnomer. “Artificial” is defined as “made or produced by human beings rather than occurring naturally, typically as a copy of something natural.” Are baby humans artificial? Any intelligence is simply that: intelligence. The idea of it being “artificial” is part of a mental wall we put up to divide ourselves from the world around us.